(this is part 7 of the “AI for Finance Course”)

Some people believe candlestick patterns help predict market movements, so let’s test it and see if they’re correct.

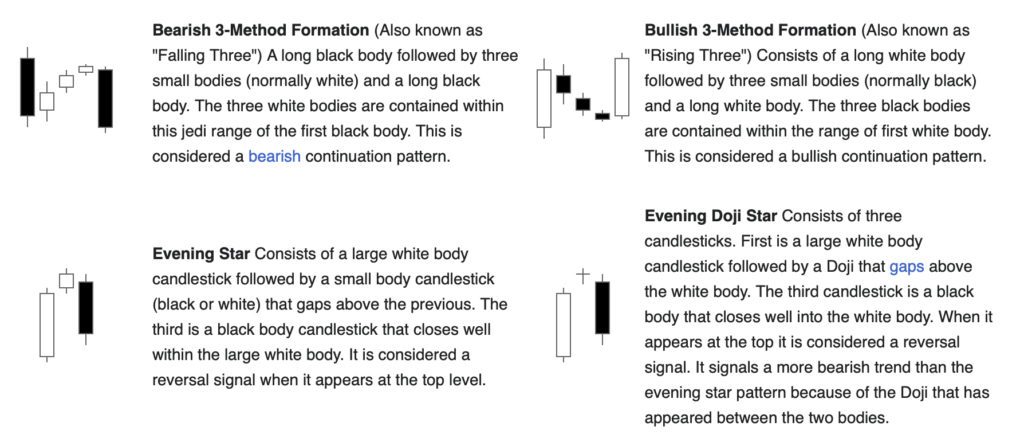

Here are some patterns mentioned on Wikipedia:

(screenshot from Wikipedia)

While these might hold value, we’re not going to hardcode them. Instead, we’re going to let our neural net recognize them on its own.

The patterns it finds might include some or all of those listed on Wikipedia. But they may also include none.

The neural network might just learn brand new patterns specific to this asset and market conditions.

Key concepts

So far we passed a single candle to the network. But how do we pass multiple candles at once? And how do we tell the network which candle comes first and which one’s the last?

Short answer: we don’t.

We’ll let the network figure this out on its own.

INPUTS

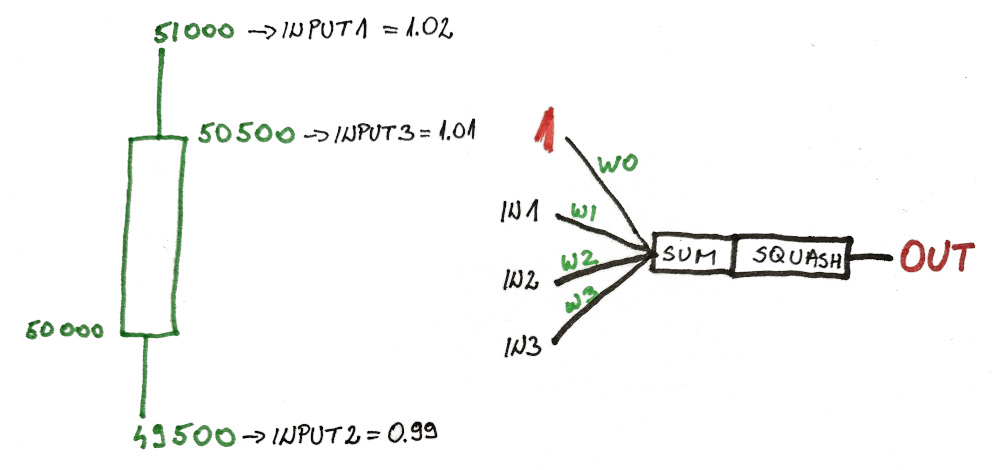

Previously, we increased the number of neurons. Now we’re going to increase the number of inputs.

We had 3+1 inputs so far (3 for a single candle, plus 1 for the bias).

And now we’re going to have 9+1 to represent 3 candles (each with its own high, low, and close price):

- IN 0: represents constant input (bias)

- IN 1-3: represent the last candle

- IN 4-6: represent 2nd last

- IN 7-9: represent 3rd last

Now let’s see what this looks like in code…

The code

First we need to change the getInputs() function to accept 3 candles instead of one. We can do this by sending an array of candles or by explicitly defining each parameter.

For simplicity (and ease of understanding) I’ll use 3 separate function arguments:

    double[] getInputs(Candle c1, Candle c2, Candle c3) {
        // each candle's high, low and close are expressed relative to its own open
        double[] inputs1 = [c1.high, c1.low, c1.close];
        inputs1[] -= c1.open;
        inputs1[] /= c1.open;

        double[] inputs2 = [c2.high, c2.low, c2.close];
        inputs2[] -= c2.open;
        inputs2[] /= c2.open;

        double[] inputs3 = [c3.high, c3.low, c3.close];
        inputs3[] -= c3.open;
        inputs3[] /= c3.open;

        return inputs1 ~ inputs2 ~ inputs3;
    }
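The array-based alternative mentioned earlier could look roughly like the sketch below. It is only an illustration: the `Candle` struct is a stand-in with just the four price fields (the course’s real `Candle` type is assumed to carry more), and `getInputsFromWindow` is a hypothetical name, not part of the course code.

```d
import std.math : isClose;

// Stand-in for the course's Candle type (assumption: only these fields matter here).
struct Candle {
    double open, high, low, close;
}

// Hypothetical helper: builds the input vector for any number of candles.
// Each candle contributes (high, low, close) relative to its own open,
// in the order the candles are passed in (most recent first in the lesson).
double[] getInputsFromWindow(const Candle[] window) {
    double[] inputs;
    foreach (c; window) {
        inputs ~= [
            (c.high - c.open) / c.open,
            (c.low - c.open) / c.open,
            (c.close - c.open) / c.open,
        ];
    }
    return inputs;
}

unittest {
    // Made-up prices, purely for illustration.
    auto window = [
        Candle(100, 110, 95, 105),  // last candle
        Candle(200, 202, 198, 200), // 2nd last
        Candle(50, 55, 45, 48),     // 3rd last
    ];
    auto inputs = getInputsFromWindow(window);
    assert(inputs.length == 9);        // 3 candles x 3 values
    assert(isClose(inputs[0], 0.10));  // (110 - 100) / 100
    assert(inputs[5] == 0.0);          // (200 - 200) / 200
}
```

With this shape, moving from 3 to 5 candles only means passing a longer slice and sizing the first-layer weights to match (3 × candles + 1).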

And in our for() loop we need to start from the third element (so we can pass the previous two to the getInputs() function):

    for (int i = 2; i < trainingCandles.length - 1; i++) {
        double[] inputs = getInputs(trainingCandles[i], trainingCandles[i-1], trainingCandles[i-2]);
        // ... the rest of the loop body stays the same ...
    }
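To make the loop bounds concrete: each training sample needs candles `i-2`, `i-1` and `i` as inputs plus candle `i+1` as the correct answer, so `i` runs from 2 up to the second-to-last index. The helper below is hypothetical (it is not in the course code) and only counts how many samples that yields.

```d
// Hypothetical helper: number of (3-candle window, next-candle label) pairs
// visited by `for (i = 2; i < n - 1; i++)` on a series of n candles.
size_t countTrainingSamples(size_t n) {
    // Guard first: size_t is unsigned, so n - 1 would wrap around for n == 0.
    if (n < 4) return 0;
    // i takes the values 2, 3, ..., n - 2, which is n - 3 values in total.
    return n - 3;
}

unittest {
    assert(countTrainingSamples(3) == 0);      // not enough candles for one sample
    assert(countTrainingSamples(4) == 1);      // only i = 2
    assert(countTrainingSamples(1000) == 997);
}
```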

And also, we need to increase the number of weights in the first layer so our neurons can get the information from all 3 candles:

    // 1st neuron, 1st layer
    double[] weights1Layer1 = repeat(0.5, 10).array; // 9+1
    // 2nd neuron, 1st layer
    double[] weights2Layer1 = repeat(0.5, 10).array; // 9+1
    // 1st neuron, 2nd layer
    double[] weights1Layer2 = repeat(0.5, 3).array; // 2+1

Also note: once you train the network, you can just print out the weights and use them to replace these initial 0.5 values. You can also train your network during a backtest and then copy the weights over for LIVE trading.

Another thing…

Since we now have more weights (23), updating them one by one might be slow. Plus, the search can get stuck because changing a single weight is often too small a step to produce a better outcome.

Therefore I prefer to update a small sample of weights at a time, usually about the square root of the number of weights:

√23 ≈ 4.8
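As a small sketch of that rule of thumb (the helper name `sampleSize` is made up for illustration, and rounding down is an assumption that matches the sample of 4 used in this lesson):

```d
import std.math : sqrt;

// Hypothetical helper: how many weights to re-roll per training step,
// using the square-root rule of thumb (rounded down, but at least 1).
size_t sampleSize(size_t totalWeights) {
    auto k = cast(size_t) sqrt(cast(double) totalWeights);
    return k < 1 ? 1 : k;
}

unittest {
    // 10 + 10 weights in the first layer, 3 in the second.
    size_t total = 10 + 10 + 3;
    assert(total == 23);
    assert(sampleSize(total) == 4); // sqrt(23) is about 4.8, rounded down
}
```

If you later add neurons or inputs, recomputing the sample size this way avoids having to remember to change a hardcoded 4.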

So we’ll just make a small update to the function that does the updates:

    void updateRandomWeights() {
        // [-3,+3]
        // square root of all weights (23) is ~ 4.8
        // so I'm gonna update sample of 4 each time
        backupWeights();
        for (int i = 0; i < 4; i++) {
            int idx = uniform(0, 23, rand);
            if (idx <= 9) {
                weights1Layer1[idx] = uniform01(rand)*6-3;
            }
            if (idx >= 10 && idx <= 19) {
                weights2Layer1[idx-10] = uniform01(rand)*6-3;
            }
            if (idx >= 20 && idx <= 22) {
                weights1Layer2[idx-20] = uniform01(rand)*6-3;
            }
        }
    }

Notice that we’re choosing the new weights randomly in the range of -3 to +3, which is wide enough to push a neuron’s output to either extreme. With our sigmoid function, an input of -3 gives an output very close to zero (about 0.0025) and an input of +3 gives an output very close to one (about 0.9975).
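You can check those edge values yourself with a few lines. The `sigmoid` below is the same tanh-based function used in this lesson’s code; the last assertion shows that it equals the usual logistic function evaluated at 2x, which is why it saturates faster than the textbook version.

```d
import std.math : tanh, exp, isClose;

// Same squashing function as in the lesson's code: tanh rescaled to (0, 1).
double sigmoid(double input) {
    return (tanh(input) + 1) / 2.0;
}

unittest {
    // At the edges of the [-3, +3] range the output is already near 0 or 1.
    assert(sigmoid(-3.0) < 0.003);
    assert(sigmoid(3.0) > 0.997);
    // In the middle it sits at exactly one half.
    assert(isClose(sigmoid(0.0), 0.5));
    // Identity: (tanh(x) + 1) / 2 == 1 / (1 + e^(-2x)).
    assert(isClose(sigmoid(0.7), 1.0 / (1.0 + exp(-1.4))));
}
```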

In short, these are all the changes you need. And you can easily extend this code to include even more neurons, layers, and inputs.

Here is the code in its entirety:

    module bbot.bot010;

    import std.stdio : pp = writeln;
    import std.algorithm : map, sum;
    import std.algorithm.comparison : min;
    import std.array : array;
    import std.algorithm.searching : minElement;
    import std.math.trigonometry : tanh;
    import std.conv : to;
    import std.random : Random, choice, uniform01, uniform;
    import std.range : iota, repeat, generate, take;

    import c = blib.constants;
    import bbot.badgerbot : AbstractBadger;
    import bmodel.chart : Chart;
    import bmodel.candle : Candle;
    import bmodel.order : Order;
    import bmodel.enums : Interval, OrderType, Direction, Action, Color;
    import blib.utils : getStringFromTimestamp;

    class BadgerBot : AbstractBadger {
        override void setup() {
            this.TRADE_LIVE = false; // ATTENTION !!!

            // ignored when running LIVE bot (or pulling real values)
            this.capital = 100_000;
            this.transactionFee = 0.001; // 0.1% -> approx like this on Binance
            this.backtestPeriod = ["2024-01-01T00:00:00", "2024-04-30T00:00:00"]; // semi-open interval: [>
            // backtestPeriod ignored on LIVE - calculated from pastCandlesCount, candleInterval (and current timestamp)

            // applied always
            this.candleInterval = Interval._1M;
            this.pastCandlesCount = 1000; // example: set to 50 for 50-candle moving average (limit 1000 candles total for LIVE - for simplicity)
            this.tickers = [c.BTCUSDT]; // ETHUSDT

            //this.chart = Chart(450, 200); // comment out when you want to visualize like on LIVE but backtest will (intentionally) run slower

            // this.positions; // will be autofilled
            // this.candles; // will be autofilled

            super.setup(); // DON'T REMOVE
        }

        // CANDLE PATTERNS RECOGNITION

        bool hasOpenPosition() {
            return this.positions.length != 0 && this.positions[$-1].action == Action.OPEN;
        }

        bool isAITrained = false;
        auto rand = Random(1);

        double[] backupWeights1 = [];
        double[] backupWeights2 = [];
        double[] backupWeights3 = [];

        // 1st neuron, 1st layer
        double[] weights1Layer1 = repeat(0.5, 10).array; // 9+1
        // 2nd neuron, 1st layer
        double[] weights2Layer1 = repeat(0.5, 10).array; // 9+1
        // 1st neuron, 2nd layer
        double[] weights1Layer2 = repeat(0.5, 3).array; // 2+1

        double sigmoid(double input) {
            return (tanh(input) + 1) / 2.0;
        }

        double[] getInputs(Candle c1, Candle c2, Candle c3) {
            // each candle's high, low and close are expressed relative to its own open
            double[] inputs1 = [c1.high, c1.low, c1.close];
            inputs1[] -= c1.open;
            inputs1[] /= c1.open;

            double[] inputs2 = [c2.high, c2.low, c2.close];
            inputs2[] -= c2.open;
            inputs2[] /= c2.open;

            double[] inputs3 = [c3.high, c3.low, c3.close];
            inputs3[] -= c3.open;
            inputs3[] /= c3.open;

            return inputs1 ~ inputs2 ~ inputs3;
        }

        double getCorrectOutput(Candle c) {
            if (c.color == Color.GREEN) return 1.0;
            return 0.0;
        }

        Color getPredictedColor(double prediction) {
            return (prediction > 0.5) ? Color.GREEN : Color.RED;
        }

        double getPrediction(double[] inputs, double[] weights) {
            double[] weightedInputs = [1.0] ~ inputs; // prepend the constant bias input
            weightedInputs[] *= weights[];
            double weightedSum = weightedInputs.array.sum;
            double prediction = sigmoid(weightedSum);
            return prediction;
        }

        void backupWeights() {
            backupWeights1 = weights1Layer1.dup;
            backupWeights2 = weights2Layer1.dup;
            backupWeights3 = weights1Layer2.dup;
        }

        void restoreWeights() {
            weights1Layer1 = backupWeights1.dup;
            weights2Layer1 = backupWeights2.dup;
            weights1Layer2 = backupWeights3.dup;
        }

        void updateRandomWeights() {
            // [-3,+3]
            // square root of all weights (23) is ~ 4.8
            // so I'm gonna update sample of 4 each time
            backupWeights();
            for (int i = 0; i < 4; i++) {
                int idx = uniform(0, 23, rand);
                if (idx <= 9) {
                    weights1Layer1[idx] = uniform01(rand)*6-3;
                }
                if (idx >= 10 && idx <= 19) {
                    weights2Layer1[idx-10] = uniform01(rand)*6-3;
                }
                if (idx >= 20 && idx <= 22) {
                    weights1Layer2[idx-20] = uniform01(rand)*6-3;
                }
            }
        }

        void trainNetwork(Candle[] trainingCandles) {
            // lowest error seen so far; starts at the worst case of 1.0 per candle
            double maxError = trainingCandles.length;
            for (int n = 0; n < 5000; n++) { // 5000 times through the data
                //pp(weights1Layer1);
                double allDataError = 0;
                string printing = "";
                updateRandomWeights();
                for (int i = 2; i < trainingCandles.length - 1; i++) {
                    double[] inputs = getInputs(
                        trainingCandles[i],
                        trainingCandles[i-1],
                        trainingCandles[i-2]);
                    double prediction1Layer1 = getPrediction(inputs, weights1Layer1);
                    double prediction2Layer1 = getPrediction(inputs, weights2Layer1);
                    double prediction1Layer2 = getPrediction([prediction1Layer1, prediction2Layer1], weights1Layer2);
                    double output = getCorrectOutput(trainingCandles[i+1]);
                    double error1Layer1 = prediction1Layer2 - output;
                    allDataError += error1Layer1 * error1Layer1;
                    //printing ~= to!string(inputs) ~ " : " ~ to!string(prediction1Layer1) ~ " --> " ~to!string(output) ~ "\n";
                }
                if (allDataError < maxError) {
                    // keep the new weights only if they lowered the total error
                    maxError = allDataError;
                    pp();
                    pp("ERROR: ", maxError);
                    pp(weights1Layer1);
                    pp(weights2Layer1);
                    pp(weights1Layer2);
                    //pp(printing);
                } else {
                    restoreWeights();
                }
                //pp(weights1Layer1);
            }
            pp();
            pp("final weights:");
            pp(weights1Layer1);
            pp(weights2Layer1);
            pp(weights1Layer2);
            pp();
            pp("TRAINING COMPLETE");
            pp();
        }

        override bool trade() {
            if(!super.trade()) return false;
            // ALL CODE HERE - BEGIN //

            if (!isAITrained) {
                // training on 1000 candles prior to 2024-01-01
                // see ==> this.pastCandlesCount
                trainNetwork(this.candles[c.BTCUSDT][0..$-1]);
                isAITrained = true;
            }

            Candle[] btcCandles = this.candles[c.BTCUSDT];
            double currentPrice = btcCandles[$-1].close;

            Candle lastCandle = btcCandles[$-2];
            Candle secondCandle = btcCandles[$-3];
            Candle thirdCandle = btcCandles[$-4];

            double[] inputs = getInputs(lastCandle, secondCandle, thirdCandle);
            double prediction1Layer1 = getPrediction(inputs, weights1Layer1);
            double prediction2Layer1 = getPrediction(inputs, weights2Layer1);
            double prediction1Layer2 = getPrediction([prediction1Layer1, prediction2Layer1], weights1Layer2);
            Color predictedNextCandleColor = getPredictedColor(prediction1Layer2);

            if (
                predictedNextCandleColor == Color.GREEN &&
                !hasOpenPosition()
            ) {
                Order marketBuy = Order(
                    c.BTCUSDT,
                    OrderType.MARKET,
                    Direction.BUY,
                    currentPrice * 1.003, // target price = +0.3%
                    currentPrice * 0.995, // stop price = -0.5%
                );
                this.order(marketBuy);
            }

            // ALL CODE HERE - END //
            return true;
        }
    }

This will also allow you to train even bigger networks. The only difference is that you might need to do more iterations.

Test results

In this example the network converges fairly quickly. After 1,000 iterations the error has already nearly levelled off, but I set the count to 5,000 just in case.

For example, at the beginning, you could expect an error of approximately 500 (because we have 1000 training samples and roughly 500 of them will be wrong).

This is because our network is untrained and we can expect just random behavior and pure statistical guessing.

    ERROR: 505.657
    [0.5, 0.5, 0.5, 0.5, 0.5, 0.5, -2.23125, 0.5, 0.5, 2.98311]
    [0.5, 0.5, 2.59534, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
    [0.5, 2.99424, 0.5]

And indeed, the error at the beginning was 505.65 (basically pure random guessing) but it gets better as we train it:

    //  1,000 iterations: 249.246
    //  5,000 iterations: 249.072
    // 30,000 iterations: 248.171

But you can see the biggest improvement happened in the first 1,000 iterations, when the error went from roughly 500 to 250, cutting it in half.
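One useful reference point for these numbers: the error is a sum of squared differences against targets of 0 or 1, so a model that ignores its inputs and always answers 0.5 scores exactly 0.25 per sample, which is about 250 on roughly 1,000 samples. The sketch below illustrates that with made-up labels; `constantPredictorError` is a hypothetical helper, not part of the course code.

```d
// Sum of squared errors for a model that outputs the same value for every sample.
// `labels` holds the correct outputs (1.0 = green, 0.0 = red), as in getCorrectOutput().
double constantPredictorError(const double[] labels, double constantOutput) {
    double total = 0;
    foreach (label; labels) {
        double diff = constantOutput - label;
        total += diff * diff;
    }
    return total;
}

unittest {
    // Hypothetical labels: 1000 samples alternating green/red (not real market data).
    double[] labels = new double[1000];
    foreach (i, ref label; labels) label = (i % 2 == 0) ? 1.0 : 0.0;

    // Always answering 0.5 costs 0.25 per sample, whatever the labels are.
    assert(constantPredictorError(labels, 0.5) == 250.0);
    // Always answering "green" costs 1.0 for every red candle.
    assert(constantPredictorError(labels, 1.0) == 500.0);
}
```

So it is worth comparing any trained error against this 0.25-per-sample baseline before reading too much into it.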

So even though this Monte Carlo approach is just guesswork, you can see it converges pretty fast.

Of course, you can always give it 100,000 data points, iterate 100,000 times, and let it run overnight to produce a better model.

In the code it boils down to replacing 2 numbers (the number of training candles and the number of iterations) and you’re set.

Also remember, all this “AI” is just a bunch of (simple) number crunching. Remember the story of the Dota 2 bots that I mentioned earlier? And how they were trained on 128,000 CPU cores? What do you think they were doing?

REMEMBER:

All this “AI” hype really is just a bunch of dumb and overtrained bots.

Nothing more.

And we’re gonna build ourselves one as well… 😉

TRADING RESULTS

The trading results are surprisingly good. We only lost 80% of the account after 853 trades:

    ----------------------------
    |        PERFORMANCE       |
    ----------------------------
    - Initial capital: 100000.00
    - Final capital::: 19495.85
    - Total trades:::: 853
    - ROI:::::: -80.50 %
    - Min. ROI: -80.58 %
    - Max. ROI: 0.30 %
    - Drawdown: -80.64 %
    - Pushup::: 1.81 %
    ----------------------------

And here are the results for trading without any transaction fees:

    ----------------------------
    |        PERFORMANCE       |
    ----------------------------
    - Initial capital: 100000.00
    - Final capital::: 107537.93
    - Total trades:::: 853
    - ROI:::::: 7.54 %
    - Min. ROI: -9.84 %
    - Max. ROI: 11.18 %
    - Drawdown: -10.65 %
    - Pushup::: 23.32 %
    ----------------------------
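The gap between the two runs can be roughly explained by fee compounding alone. The sketch below is a back-of-the-envelope estimate, not the backtester’s actual accounting: it assumes the 0.1% fee is charged on both the entry and the exit of every trade, and that each trade uses the full capital. `feeMultiplier` is a hypothetical helper.

```d
import std.math : pow;

// Rough estimate of the capital multiplier caused by fees alone.
// Assumptions (not confirmed by the backtester): the fee applies to both
// sides of every trade, and every trade uses the whole account.
double feeMultiplier(double feeRate, int trades) {
    return pow(1.0 - feeRate, 2 * trades);
}

unittest {
    // 0.1% fee and 853 trades, as in the runs above.
    double m = feeMultiplier(0.001, 853);
    assert(m > 0.18 && m < 0.183);
}
```

Under these assumptions the multiplier comes out at roughly 0.18, and applying it to the fee-free final capital of 107,537.93 lands in the neighbourhood of the 19,495.85 reported with fees. In other words, most of the 80% loss appears to come from paying fees 853 times rather than from the predictions themselves.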

Fees aside, this bot performed fairly well. Overall, the movements to the upside outweigh the movements to the downside. So I’m quite satisfied with it…

WHAT’S THE PROBLEM?

Nothing really, it’s just that our entire setup was wrong (intentionally, to ease the learning process).

Realize…

The next candle has very little to do with the overall price movement. For example, the next candle could go up, but the overall price over the next 10-20 candles goes down and we hit the stop loss.

What we should be teaching our network instead is to recognize what happens to the price after each candle pattern, regardless of how many candles need to pass until one of two outcomes occurs (target or stop).
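To give a feel for what such a training label could look like, here is a minimal sketch. It is not the course’s implementation (that comes in the next lesson): the `Candle` struct is a stand-in, `labelOutcome` is a hypothetical name, the +0.3% / -0.5% defaults are taken from the order in this lesson’s code, and counting a candle that touches both levels as a stop is a deliberately pessimistic assumption.

```d
import std.math : isNaN;

// Stand-in for the course's Candle type (assumption: only these fields matter here).
struct Candle {
    double open, high, low, close;
}

// Hypothetical labelling function: walks forward from the entry candle and
// returns 1.0 if the target is reached first, 0.0 if the stop is reached first,
// and NaN if the data ends before either level is touched.
double labelOutcome(const Candle[] candles, size_t entryIndex,
                    double targetPct = 0.003, double stopPct = 0.005) {
    double entry = candles[entryIndex].close;
    double target = entry * (1 + targetPct);
    double stop = entry * (1 - stopPct);
    foreach (c; candles[entryIndex + 1 .. $]) {
        // Pessimistic tie-break: if one candle touches both levels, call it a stop.
        if (c.low <= stop) return 0.0;
        if (c.high >= target) return 1.0;
    }
    return double.nan;
}

unittest {
    // Made-up prices: the second candle after entry reaches +0.4%.
    auto rising = [
        Candle(100, 100, 100, 100),
        Candle(100, 100.2, 99.8, 100.1),
        Candle(100.1, 100.4, 100, 100.3),
    ];
    assert(labelOutcome(rising, 0) == 1.0);

    // Made-up prices: the first candle after entry drops to -0.6%.
    auto falling = [
        Candle(100, 100, 100, 100),
        Candle(100, 100.1, 99.4, 99.5),
    ];
    assert(labelOutcome(falling, 0) == 0.0);

    // No candles after the entry: the outcome is unknown.
    assert(isNaN(labelOutcome(rising, 2)));
}
```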

And this is why I said we will fix this later… and the good news is… this “later” is in the very next lesson.

NEXT LESSON:

Predicting Target/Stop Prices with Neural Network